Hae Jong Seo and Peyman Milanfar

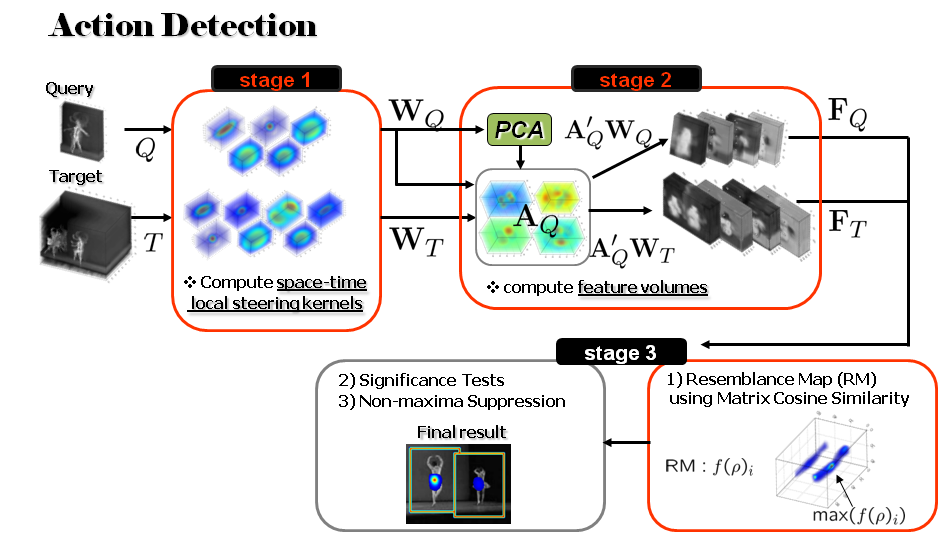

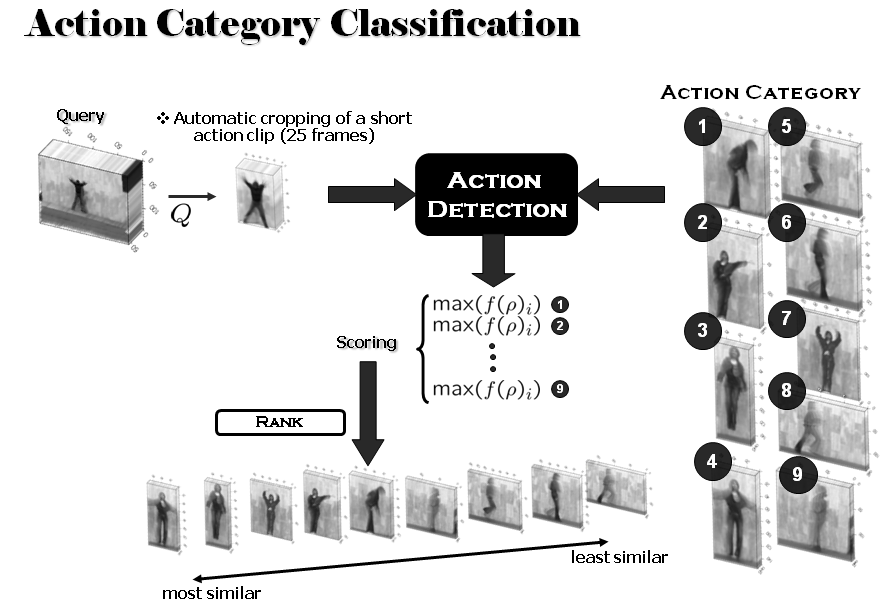

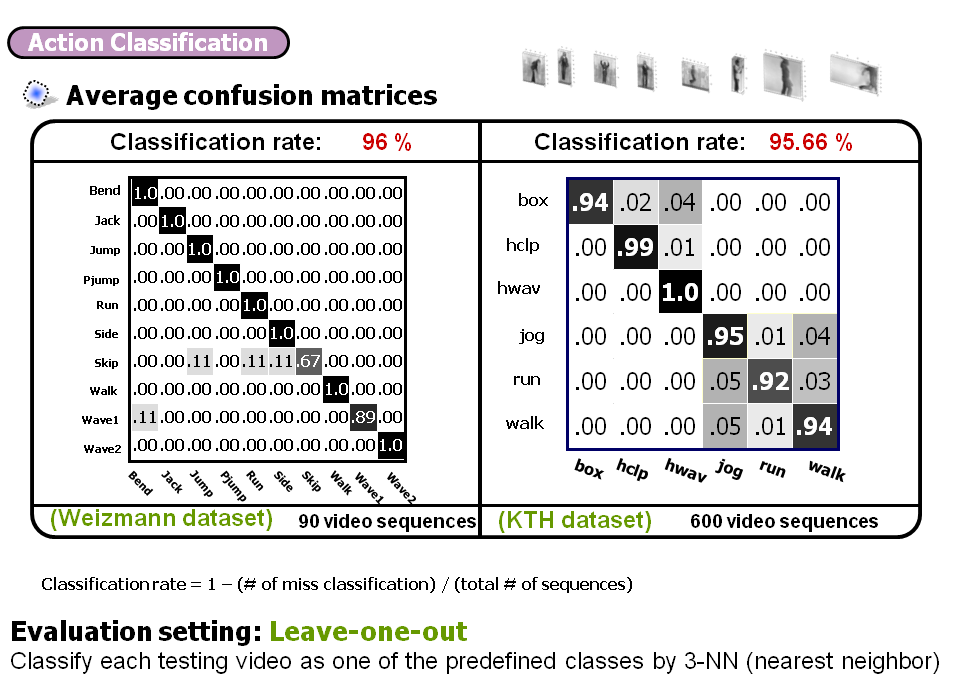

We present a novel human action recognition method based on space-time locally adaptive regression kernels and the matrix cosine similarity measure. The proposed method uses a single example (e.g., a short video clip) of an action of interest to find similar matches. It requires no prior knowledge (learning) about the actions being sought, and no foreground/background segmentation, motion estimation, or tracking. Our method computes so-called local steering kernels as space-time descriptors from a query video; these measure the likeness of a voxel to its surroundings. Salient features are extracted from these descriptors and compared against analogous features from the target video, using a matrix generalization of the cosine similarity measure. The algorithm yields a scalar resemblance volume in which each voxel indicates the likelihood of similarity between the query video and the corresponding cube in the target video. By employing nonparametric significance tests and non-maxima suppression, we detect the presence and location of actions similar to the given query video. High performance is demonstrated on a challenging set of action data, indicating successful detection of actions in the presence of fast motion, in different contexts, and even when multiple complex actions occur simultaneously within the camera's field of view. Further experiments on the Weizmann and KTH datasets for the action categorization task demonstrate that the proposed method improves upon other state-of-the-art algorithms.
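The comparison step described above can be illustrated with a minimal Python sketch. It is not the authors' Matlab implementation. It assumes the salient features of the query and of each target cube are already available as equally sized matrices, and it computes the matrix cosine similarity as the Frobenius inner product divided by the product of the Frobenius norms. The function names, the `eps` guard, and the mapping from similarity to resemblance score (ρ² / (1 − ρ²)) are assumptions made for illustration and should be checked against the papers listed below.

```python
import math
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def frobenius_inner(a: Matrix, b: Matrix) -> float:
    """Frobenius inner product: sum of element-wise products.

    Assumes a and b have the same shape (not checked here).
    """
    return sum(x * y for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


def matrix_cosine_similarity(f_q: Matrix, f_t: Matrix) -> float:
    """Cosine of the angle between two same-shaped feature matrices."""
    norm_q = math.sqrt(frobenius_inner(f_q, f_q))
    norm_t = math.sqrt(frobenius_inner(f_t, f_t))
    if norm_q == 0.0 or norm_t == 0.0:
        # Assumption: an all-zero feature matrix is treated as "no match".
        return 0.0
    return frobenius_inner(f_q, f_t) / (norm_q * norm_t)


def resemblance(rho: float, eps: float = 1e-12) -> float:
    """Map rho in [-1, 1] to rho^2 / (1 - rho^2).

    Assumed mapping for illustration; eps guards against rho = +/-1.
    """
    return rho * rho / max(1.0 - rho * rho, eps)


def resemblance_profile(f_q: Matrix, target_windows: Sequence[Matrix]) -> List[float]:
    """Score every target window (cube features) against the query features."""
    return [resemblance(matrix_cosine_similarity(f_q, w)) for w in target_windows]


# Toy usage: two hypothetical target windows compared with one query.
query = [[1.0, 0.0], [0.0, 1.0]]
windows = [
    [[2.0, 0.0], [0.0, 2.0]],  # scaled copy of the query
    [[0.0, 1.0], [1.0, 0.0]],  # orthogonal to the query
]
scores = resemblance_profile(query, windows)
best_window = max(range(len(scores)), key=scores.__getitem__)
print("best matching window index:", best_window)
```

Because the similarity is normalized by both norms, a scaled copy of the query scores as highly as an exact copy. In the full method, the resulting scores would then pass through the significance tests and non-maxima suppression mentioned above, which this sketch omits.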

See the following papers for more details and examples.

- Hae Jong Seo and Peyman Milanfar, "Detection of Human Actions from a Single Example", IEEE International Conference on Computer Vision (ICCV), Kyoto, September 2009

- Hae Jong Seo and Peyman Milanfar, "Action Recognition from One Example", IEEE Trans. on Pattern Analysis and Machine Intelligence, vol. 33, no. 5, pp. 867-882, May 2011

System Overview

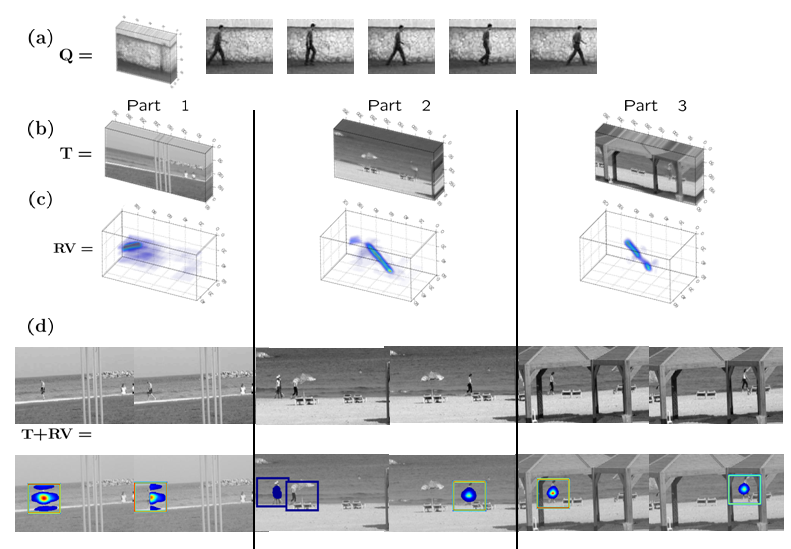

Results on Shechtman's Action Dataset

(b) Target Video

(c) Resemblance Volume

(d) Detected Actions

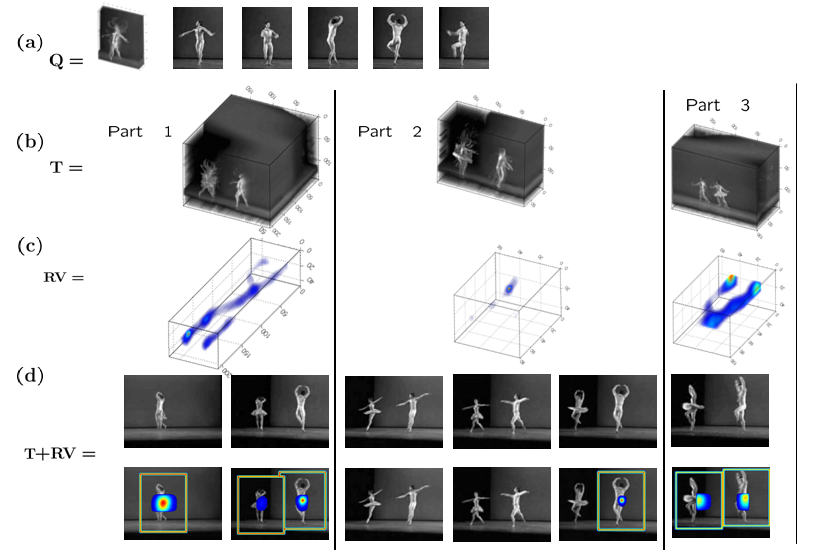

(a) Query Video

(b) Target Video

(c) Resemblance Volume

(d) Detected Actions

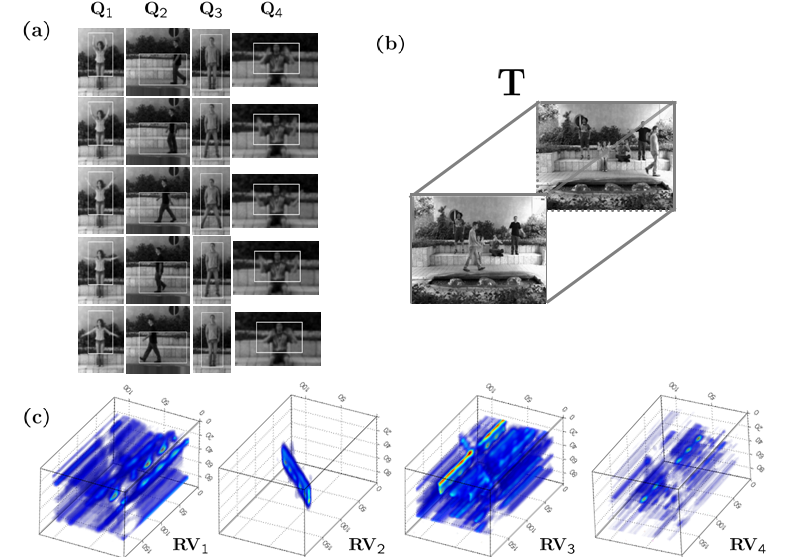

(a) Multiple Query Videos

(b) Target Video

(c) Resemblance Volumes

Matlab Package

Disclaimer: This is experimental software. It is provided for non-commercial research purposes only. Use at your own risk. No warranty is implied by this distribution. Copyright © 2010 by University of California.