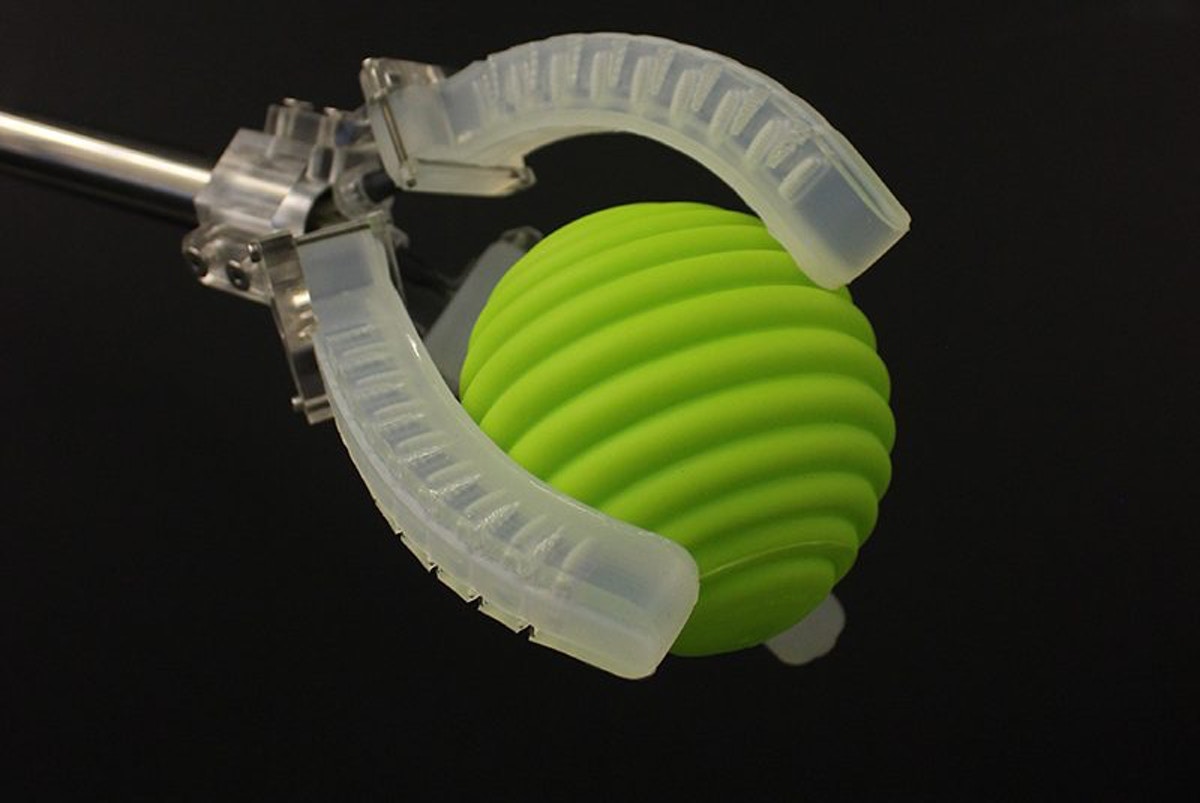

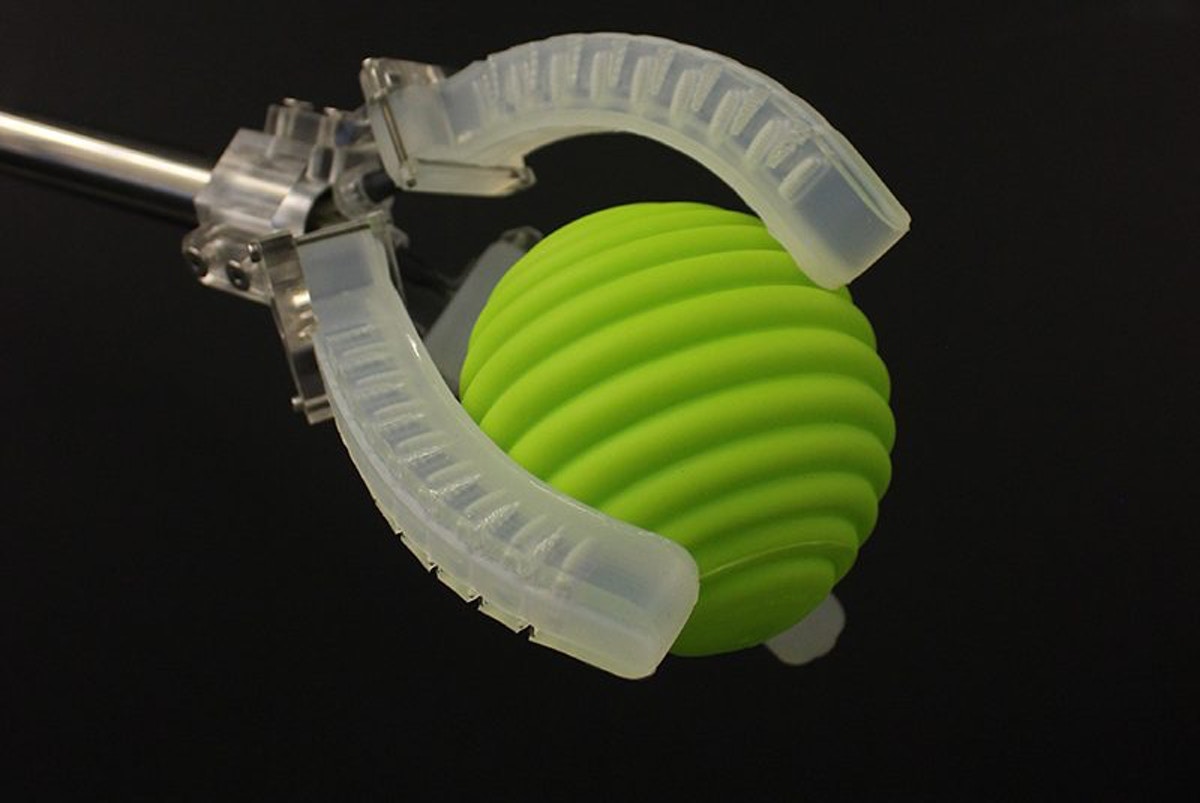

Visualization of a Somatosensitive Actuator

The Electrical and Computer Engineering department at the University of California, Santa Cruz has developed a soft somatosensitive actuator (pictured above). The actuator is shaped like a finger and is driven by compressed air to bend or flick. Designing a computer algorithm to control the actuator requires a mathematical model that relates the actuator's motion trajectories to the input pressure, and an effective data visualization method is essential for obtaining that model. To visualize the data, I placed reference points on the actuator, used the OpenCV library in Python to track the motion of a test actuator, and extracted the coordinates of the reference points. MATLAB is then used to plot graphs from those coordinates.

The project is divided into three parts: actuator creation, motion tracking, and data visualization.

Actuator Creation:

The Machine Interaction Lab at the University of California, Santa Cruz has developed molds for the Soft-Robotic-Gripper. The material poured into the molds is Dragon Skin 30. The process consists of mixing the Dragon Skin and pouring it into the mold, degassing the mold under vacuum, and baking the part in an oven at 60 °C for 10 minutes.

Motion Tracking:

OpenCV with Python is a good choice for the motion tracking part. Jupyter Notebook is a convenient platform for running the code: it runs in a web browser, so there is no separate build environment to set up. All of the software and libraries used here are open source. With both installed, a video of an actuator with reference points stuck to it is recorded as the input. A Python script using OpenCV, run in Jupyter Notebook, detects the reference points and exports the pixel coordinates of each reference point's center to a CSV file. For more information, see Motion-Tracking-code OpenCV Jupyter Notebook.
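
The detection step can be illustrated with a minimal sketch. It assumes each video frame has already been thresholded into a binary mask in which reference-point pixels are 1. In OpenCV this is commonly done with `cv2.inRange`, and centroids are usually taken with `cv2.findContours` plus `cv2.moments`, or with `cv2.connectedComponentsWithStats`. To stay runnable without OpenCV, the sketch below uses plain Python instead. A flood fill labels each 4-connected blob, and the blob's center is its first image moments divided by its area. The function names, the `min_area` filter, and the `frame,point,x,y` CSV layout are hypothetical choices for illustration, not the layout of the original notebook.

```python
import csv
from collections import deque


def find_marker_centroids(mask, min_area=1):
    """Return (x, y) centroids of the 4-connected foreground blobs in a binary mask.

    `mask` is a list of rows; nonzero pixels are foreground (for example, the
    reference-point pixels left after a colour threshold). Blobs smaller than
    the hypothetical `min_area` (in pixels) are discarded as noise.
    """
    height = len(mask)
    width = len(mask[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    centroids = []
    for y0 in range(height):
        for x0 in range(width):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            # Flood-fill one blob, accumulating its zeroth and first moments.
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            m00 = m10 = m01 = 0
            while queue:
                x, y = queue.popleft()
                m00 += 1
                m10 += x
                m01 += y
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < width and 0 <= ny < height and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if m00 >= min_area:
                # Centroid = (M10 / M00, M01 / M00), in pixel coordinates.
                centroids.append((m10 / m00, m01 / m00))
    return centroids


def write_centroids_csv(frames, out_file):
    """Write one row per detected point: frame index, point index, x, y (pixels)."""
    writer = csv.writer(out_file)
    writer.writerow(["frame", "point", "x", "y"])
    for frame_index, centroids in enumerate(frames):
        for point_index, (x, y) in enumerate(centroids):
            writer.writerow([frame_index, point_index, f"{x:.2f}", f"{y:.2f}"])


if __name__ == "__main__":
    mask = [
        [0, 1, 1, 0, 0],
        [0, 1, 1, 0, 0],
        [0, 0, 0, 0, 1],
    ]
    print(find_marker_centroids(mask))  # → [(1.5, 0.5), (4.0, 2.0)]
```

The point index in each row only reflects scan order within a frame. Once the finger bends, the same physical reference point can receive a different index in another frame, so linking points across frames is a separate step.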

Data Visualization:

MATLAB is a powerful tool for sorting the data from the CSV file generated by OpenCV and visualizing it. The script has two major parts.
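
In the original project the sorting is done in MATLAB. As a rough Python analogue of the sorting step, the sketch below reads a CSV with `frame`, `point`, `x`, and `y` columns (a hypothetical layout with one row per detected point). It groups the rows by frame, then links each reference point to its nearest neighbour in the next frame to form one trace per point. It assumes every frame contains the same number of points. It also assumes each point moves less between consecutive frames than the spacing between neighbouring points. The greedy matching is a simplification, not an optimal assignment, and the function names are illustrative.

```python
import csv
import math
from collections import defaultdict


def load_frames(csv_file):
    """Group rows of a frame,point,x,y CSV into {frame: [(x, y), ...]}.

    The `point` column is ignored because detection order within a frame is
    not guaranteed to follow the same physical reference point over time.
    """
    frames = defaultdict(list)
    for row in csv.DictReader(csv_file):
        frames[int(row["frame"])].append((float(row["x"]), float(row["y"])))
    return dict(sorted(frames.items()))


def build_tracks(frames):
    """Link points frame to frame with greedy nearest-neighbour matching.

    Assumes every frame holds the same number of reference points and that a
    point moves less between frames than the spacing between points.
    Returns one list of (x, y) positions per reference point.
    """
    ordered = [frames[k] for k in sorted(frames)]
    if not ordered:
        return []
    # Seed one track per point detected in the first frame.
    tracks = [[point] for point in ordered[0]]
    for points in ordered[1:]:
        if len(points) != len(tracks):
            raise ValueError("number of detected points changed between frames")
        remaining = list(points)
        for track in tracks:
            last = track[-1]
            # Claim the closest still-unmatched point for this track.
            nearest = min(remaining, key=lambda p: math.dist(p, last))
            remaining.remove(nearest)
            track.append(nearest)
    return tracks
```

Each returned track is a list of (x, y) pixel positions that can be plotted as one motion trace. Image rows grow downward, so the y values usually need to be negated or subtracted from the frame height before plotting to keep the trace from appearing upside down.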

The videos below show the input video and the resulting motion traces.

Many thanks to Max Belman and Jacqueline Clow at the University of California, Santa Cruz for their help compiling the motion tracking code, and to Colin Martin for his help capturing video of the actuator.