
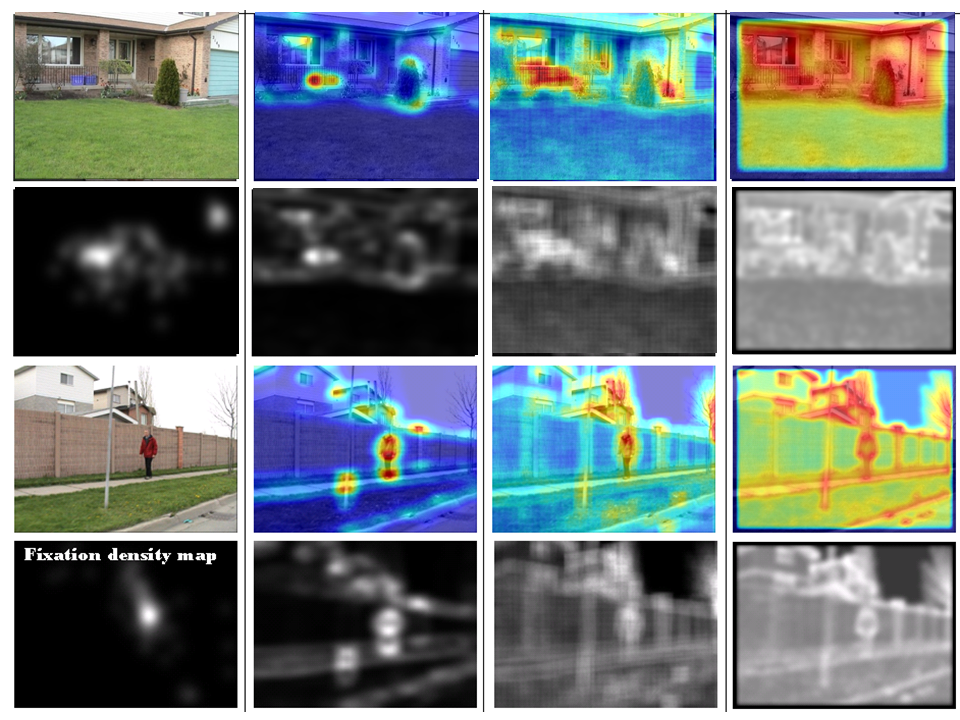

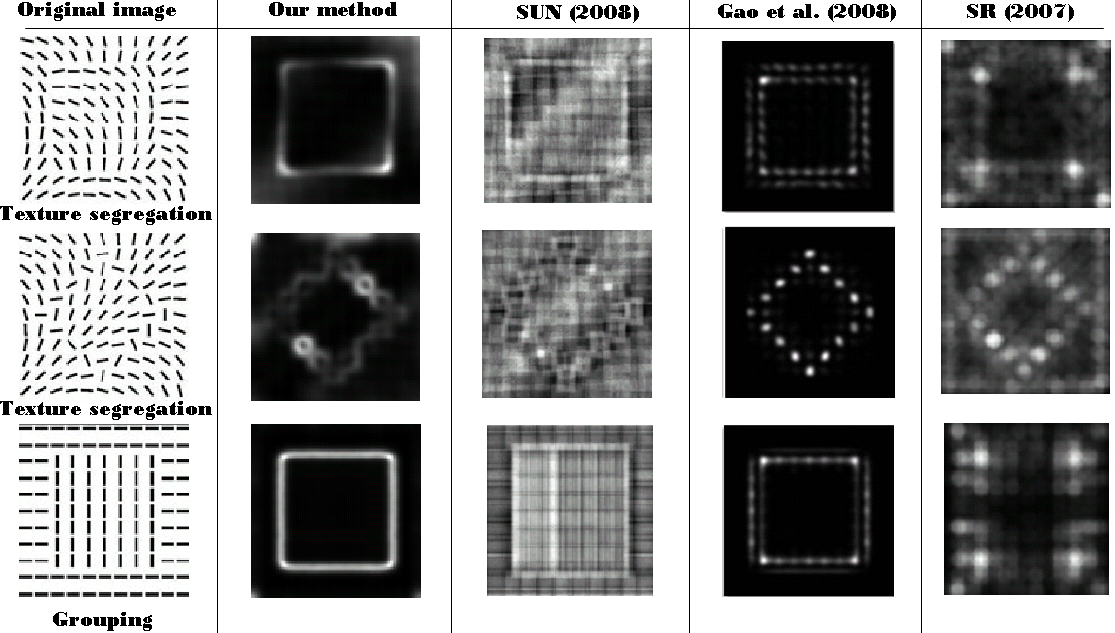

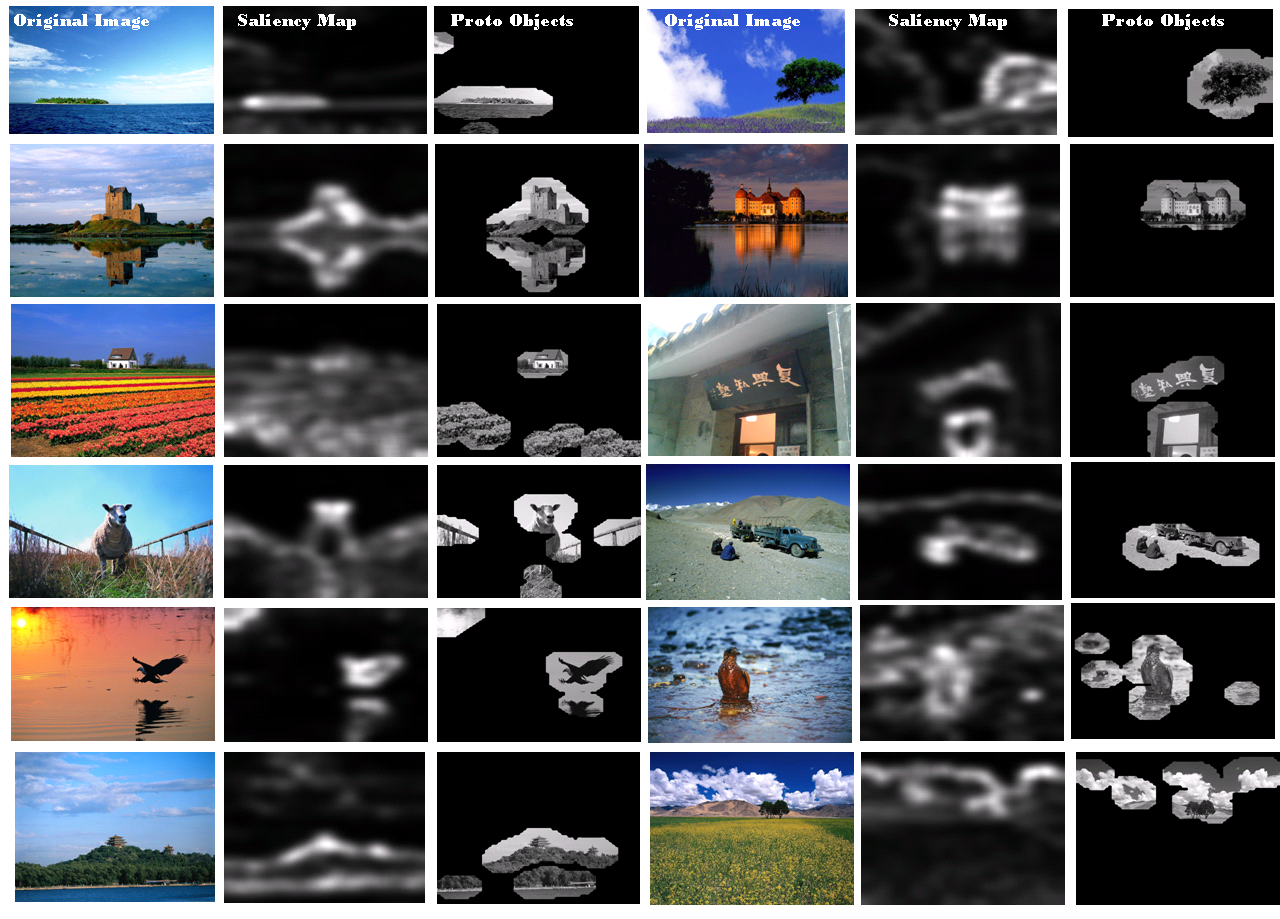

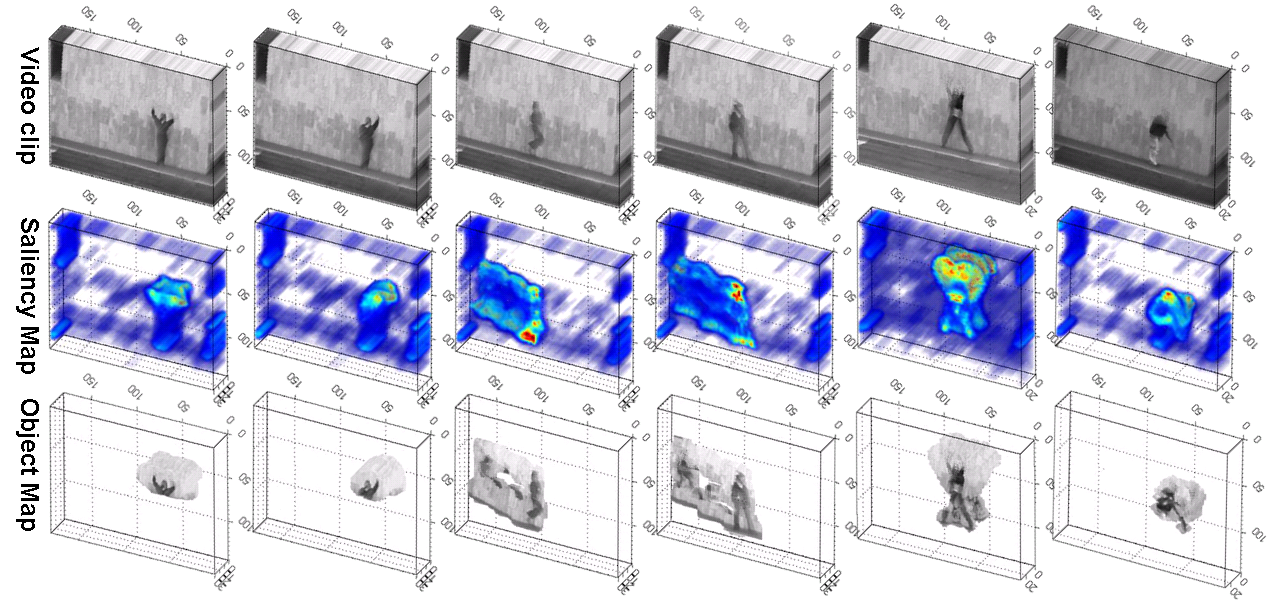

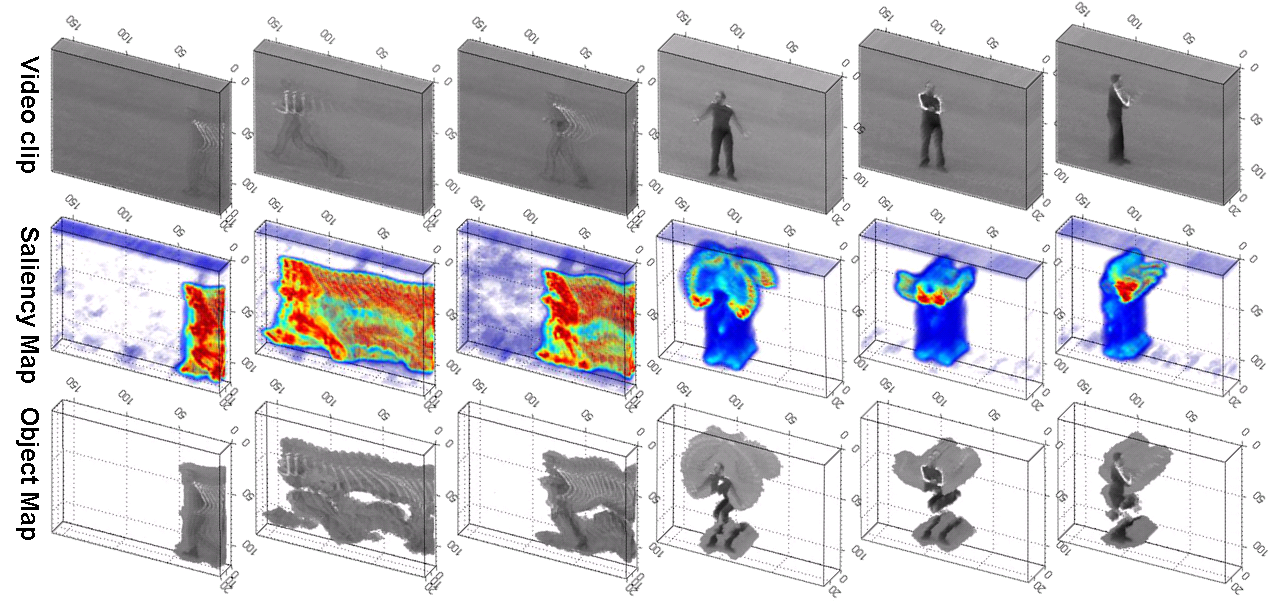

We present a novel unified framework for both static and space-time saliency detection. Our method is a bottom-up approach and computes so-called local regression kernels (i.e., local descriptors) from the given image (or video), which measure the likeness of a pixel (or voxel) to its surroundings. Visual saliency is then computed using the said "self-resemblance" measure. The framework results in a saliency map where each pixel (or voxel) indicates the statistical likelihood of saliency of a feature matrix given its surrounding feature matrices. As a similarity measure, matrix cosine similarity (a generalization of cosine similarity) is employed. State-of-the-art performance is demonstrated on commonly used human eye fixation data (static scenes and dynamic scenes) and some psychological patterns.
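
As a rough illustration of the similarity measure named above, the following minimal Python sketch computes matrix cosine similarity as the Frobenius inner product of two feature matrices divided by the product of their Frobenius norms. It then turns those similarities into a self-resemblance score. The scoring rule (the reciprocal of a sum of exponentiated similarities), the parameter `sigma` and its default value, and all function names are assumptions made for this example and are not taken from the toolbox. The feature matrices are passed in as plain nested lists; building them from local regression kernels is not shown.

```python
import math


def frobenius_inner(a, b):
    """Frobenius inner product of two equally sized matrices (lists of rows)."""
    return sum(x * y for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


def matrix_cosine_similarity(a, b):
    """Frobenius inner product normalised by both Frobenius norms."""
    norm_a = math.sqrt(frobenius_inner(a, a))
    norm_b = math.sqrt(frobenius_inner(b, b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen for this sketch only
    return frobenius_inner(a, b) / (norm_a * norm_b)


def self_resemblance(center, surround, sigma=0.1):
    """Hypothetical saliency score for one pixel (or voxel).

    The score is large when the center feature matrix resembles few of the
    surrounding feature matrices. `surround` must be non-empty, and
    sigma=0.1 is an arbitrary illustrative value.
    """
    total = sum(
        math.exp((matrix_cosine_similarity(center, f) - 1.0) / sigma ** 2)
        for f in surround
    )
    return 1.0 / total


# Toy 2x2 "feature matrices" standing in for real descriptors.
identity = [[1.0, 0.0], [0.0, 1.0]]
flipped = [[0.0, 1.0], [1.0, 0.0]]

blend_in = self_resemblance(identity, [identity] * 4)   # center matches its surround
stand_out = self_resemblance(identity, [flipped] * 4)   # center differs from its surround
print(blend_in < stand_out)
```

In this toy case the center that differs from its surroundings receives the higher score, which is the qualitative behaviour the abstract describes.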

System Overview

Experimental Results

Matlab Toolbox (New release)

See more details and examples in the following papers.

Go to object detection page

Go to action detection page


The package contains the software that can be used to compute the visual saliency map, as explained in the JoV paper above.

The included demonstration files (demo*.m) provide the easiest way to learn how to use the code.

Disclaimer: This is experimental software. It is provided for non-commercial research purposes only. Use at your own risk. No warranty is implied by this distribution. Copyright © 2010 by University of California.

File updated: Dec 13 2011
