About Me

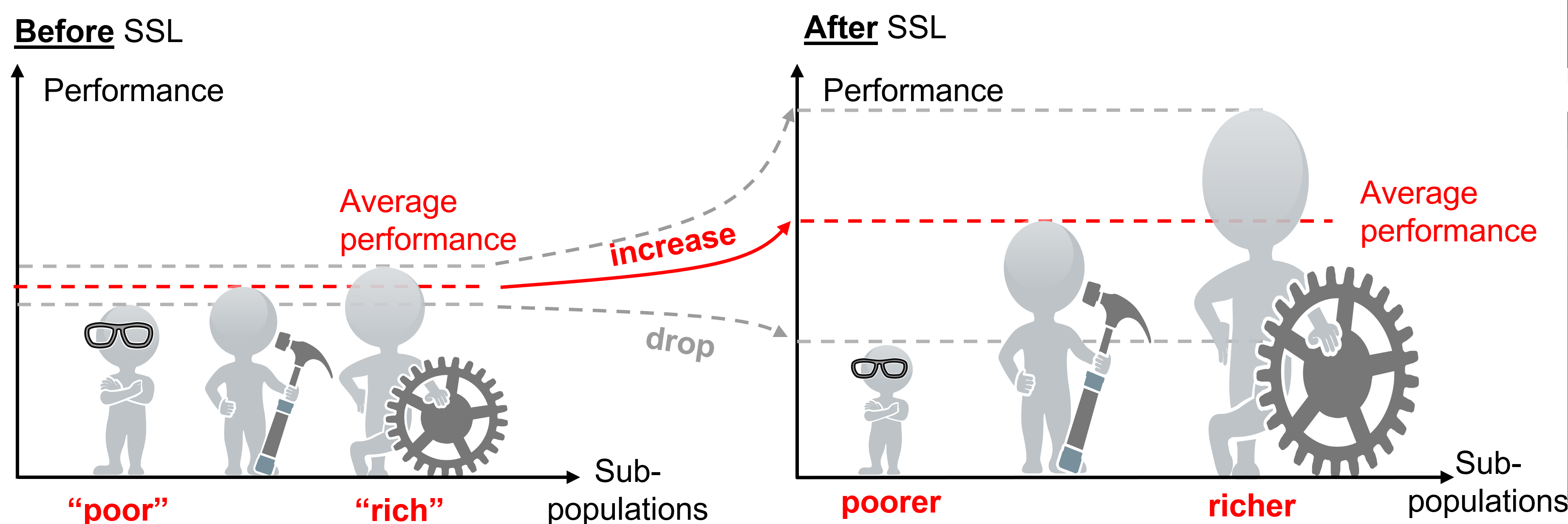

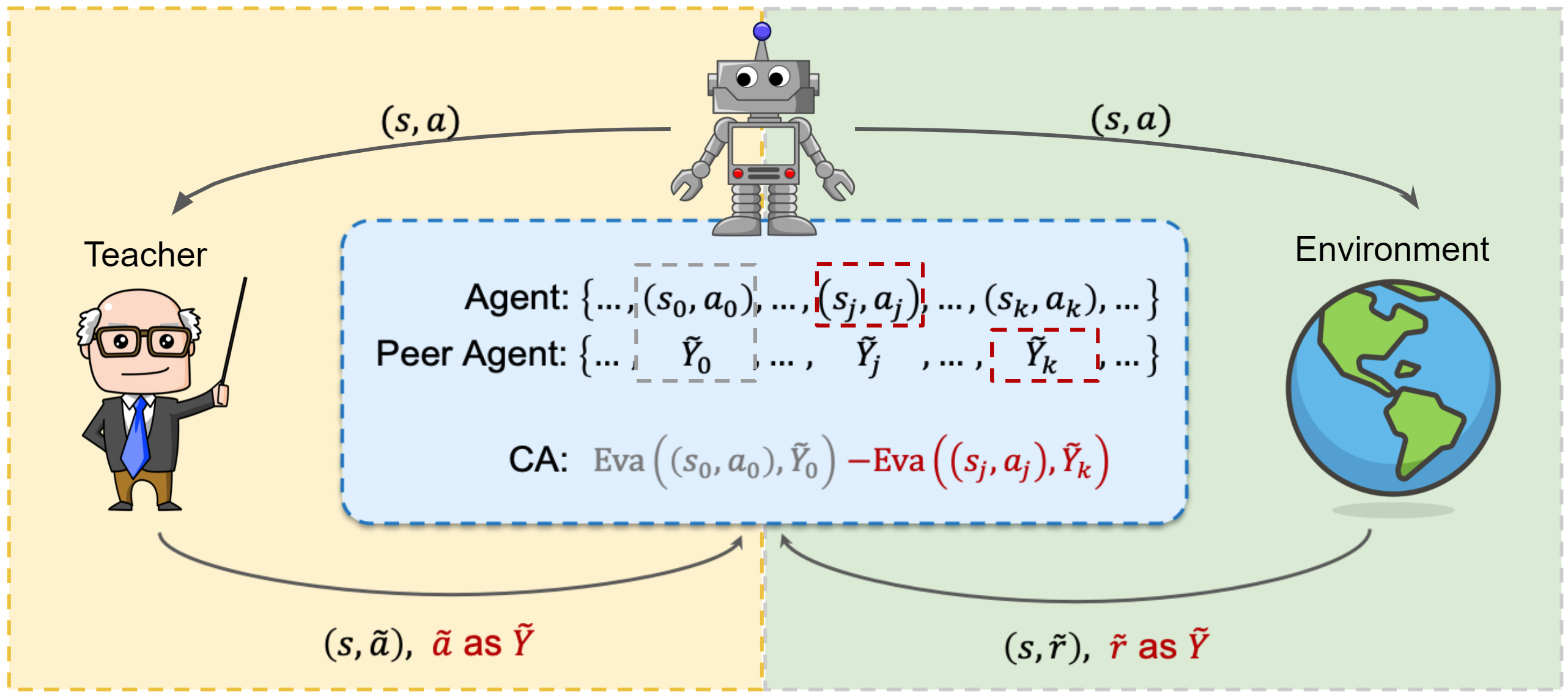

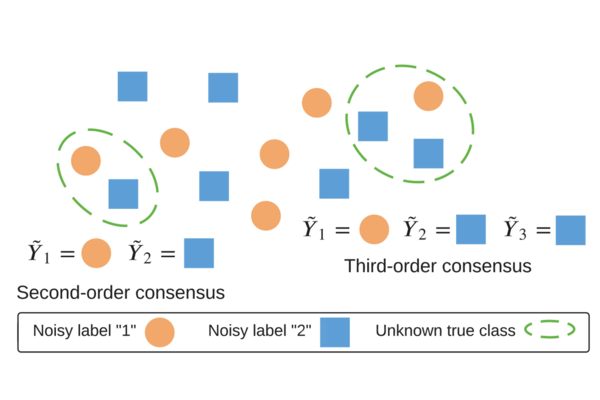

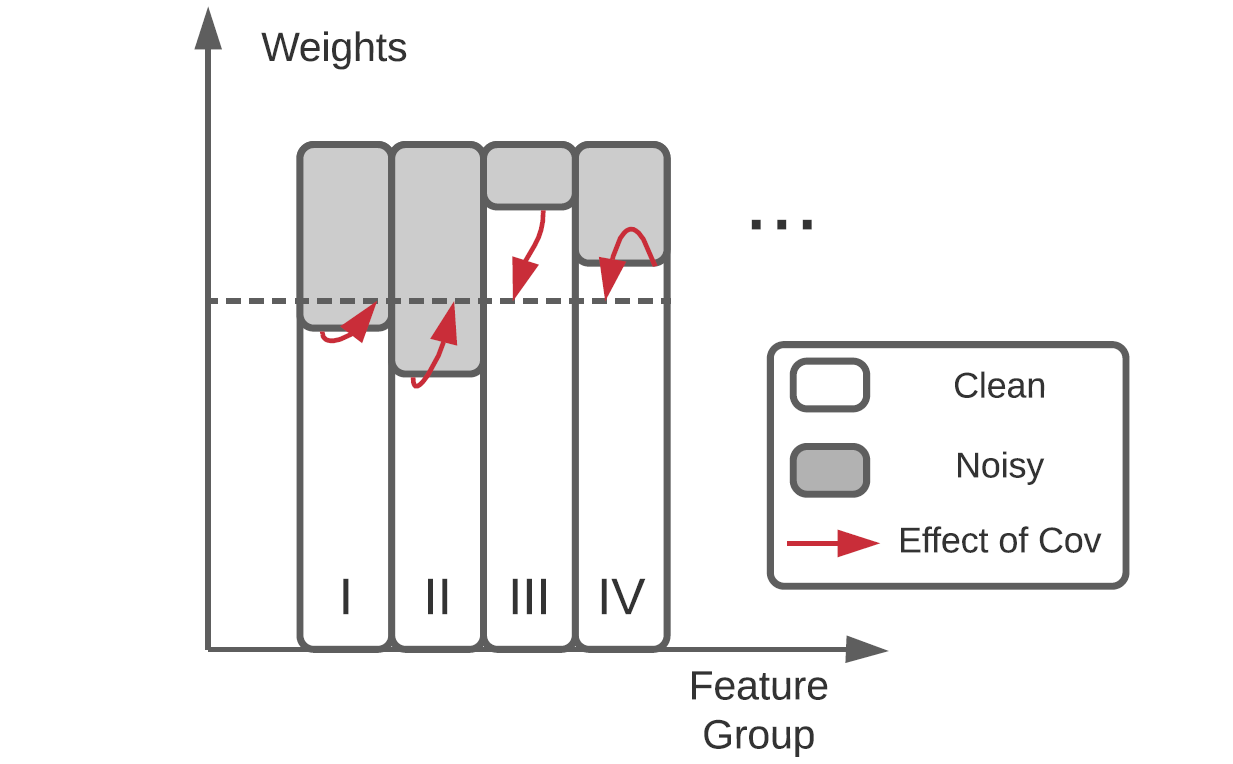

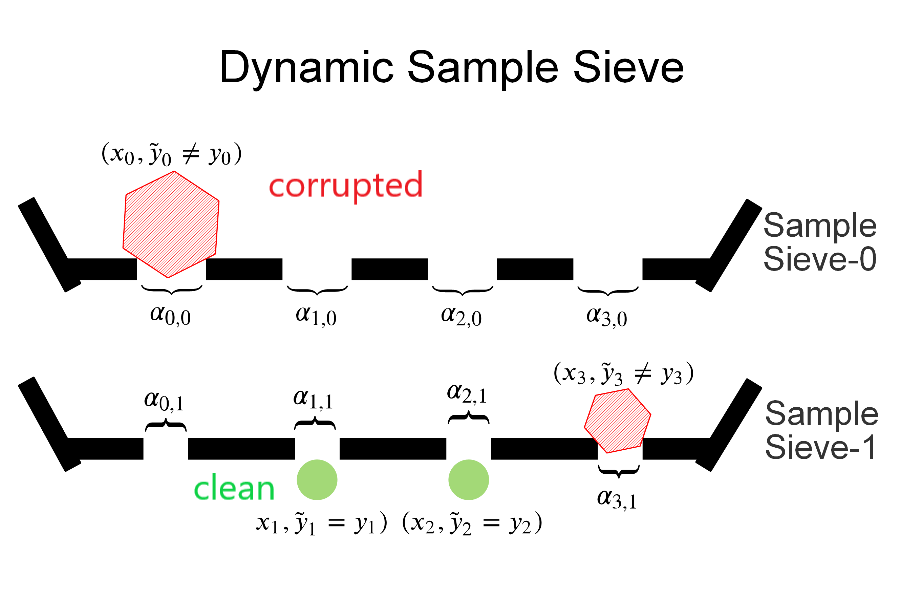

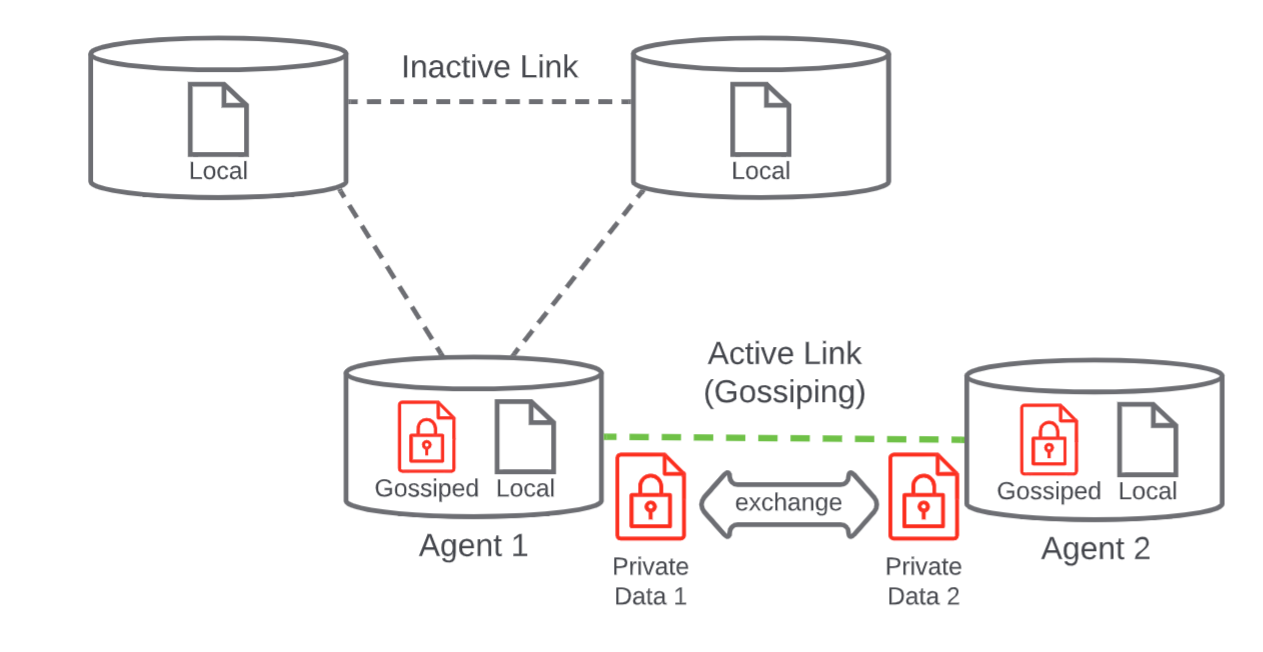

I am a Ph.D. candidate at the University of California, Santa Cruz (UCSC), CA, USA, working with Professor Yang Liu. My general research interests are in responsible, explainable, and trustworthy AI. My current research includes weakly-supervised learning (e.g., label noise, semi-supervised learning, self-supervised learning), fairness in machine learning, and federated learning. I am particularly interested in handling bias in machine learning datasets and algorithms.

Prior to UCSC, I received my B.S. degree from the University of Electronic Science and Technology of China (UESTC), Chengdu, China, in 2016, under the supervision of Prof. Wenhui Xiong, and my M.S. degree (with honors) from ShanghaiTech University, Shanghai, China, under the supervision of Prof. Xiliang Luo.

Resume | Publication | Google Scholar