#gallery Final Project Report

Shahar Zimmerman | CS160 Spring '15 Pang

App architecture

The app uses an MVC-like architecture with two controllers. The first is a Node.js program hosted on an Amazon EC2 instance; a server is required to hide sensitive API keys from the public. The second, an Angular.js program, is delivered to the client and does the brunt of the work, dealing with the Firebase NoSQL data store and Amazon S3 image storage. The data (model) is stored in Firebase and is read and written directly from the client. We connect the client to the database, rather than the server, for better integration with Angular data binding. All the views are delivered to the client and are managed by the Angular program.

The data store must hold the following information: whether the user has created a gallery, which images (and how many) have been uploaded, and metadata about those images.
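The requirements above could be captured in a record shape like the following. This is a minimal sketch: the names `ImageMeta`, `GalleryRecord`, `addImage`, and `imageCount`, and every field name, are assumptions made for illustration and are not the app's actual Firebase schema.

```typescript
// Hypothetical record shapes; field names are illustrative only.
interface ImageMeta {
  url: string;    // where the image lives (for example, an S3 object URL)
  width: number;  // original pixel width
  height: number; // original pixel height
  title?: string; // optional caption
}

interface GalleryRecord {
  hasGallery: boolean;               // whether the user has created a gallery
  images: Record<string, ImageMeta>; // uploaded images keyed by id
}

// Returns a new record with the image added; the input record is not mutated.
function addImage(record: GalleryRecord, id: string, meta: ImageMeta): GalleryRecord {
  return { ...record, images: { ...record.images, [id]: meta } };
}

// The image count is derived from the map rather than stored separately.
function imageCount(record: GalleryRecord): number {
  return Object.keys(record.images).length;
}
```

Deriving the count from the image map avoids keeping two values in sync, though a stored counter would work as well.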

Note: Submitted directory is a demo app and is not integrated with these services. To see the app in real action, go to cs160.shaharzimmerman.com

Graphics

All graphics are done in three.js.

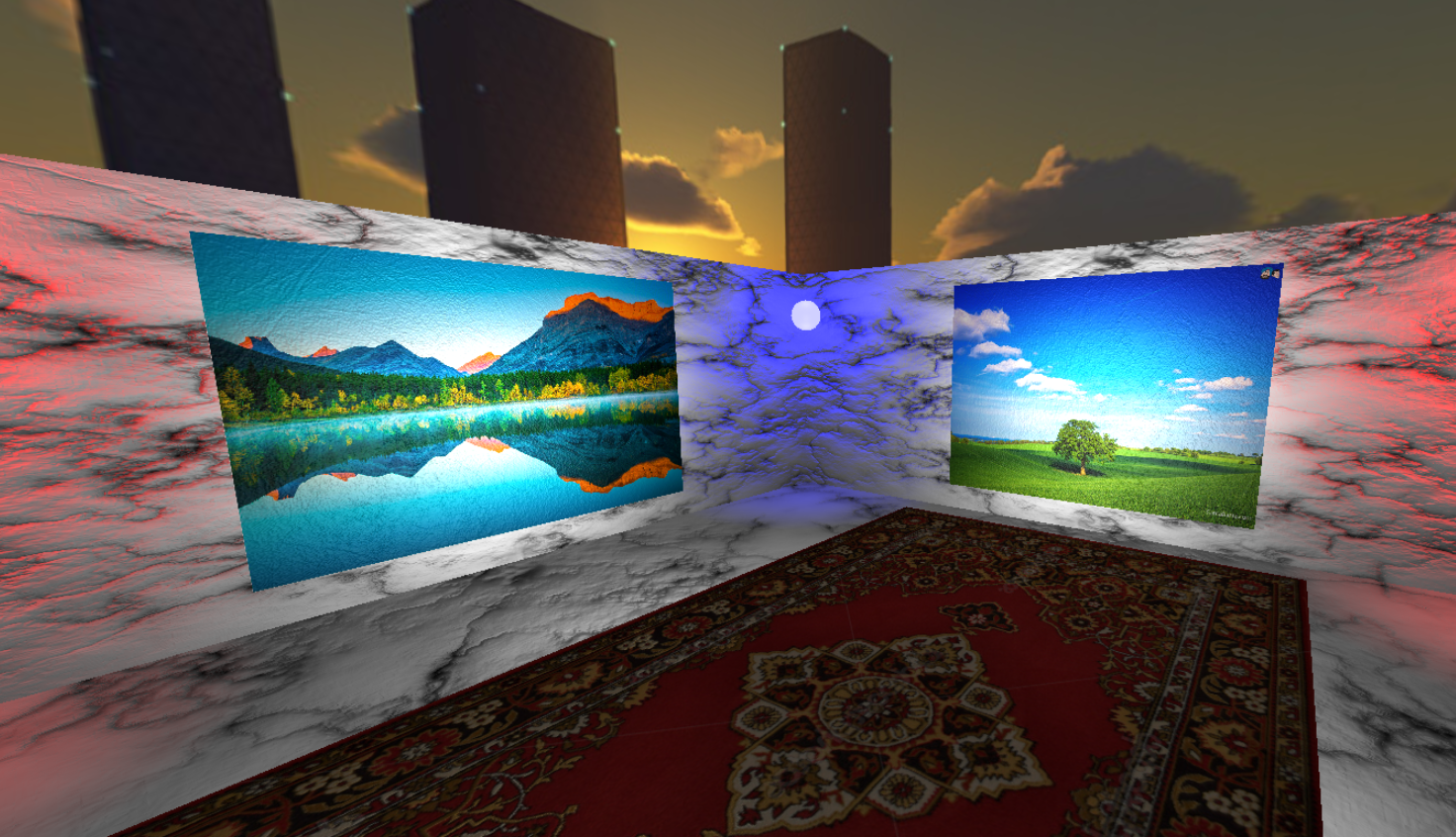

Room polygons and textures

The walls are rectangular polygons that have been rotated and translated to predetermined positions. Wall and floor polygons share the same material object, called "white_marble". This material essentially tells the fragment shaders to UV texture-map from an image sample and to bump-map based on the resulting fragment color. As illustrated in the left image, the normals of darker fragments make a smaller angle (theta) with the polygon surface.
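As a rough illustration of rotating and translating wall rectangles into place, the sketch below computes a center position and a rotation about the vertical axis for four walls. It assumes a rectangular room centered on the origin with the floor at y = 0, and a plane that faces +z before rotation (as a three.js `PlaneGeometry` does). The function names and room dimensions are hypothetical; the app's actual predetermined positions are not given in this report.

```typescript
interface WallPlacement {
  name: string;
  position: [number, number, number]; // wall center
  rotationY: number;                  // radians about the vertical (y) axis
}

// Assumption: room centered on the origin, floor at y = 0, walls face inward.
function wallPlacements(width: number, depth: number, height: number): WallPlacement[] {
  const y = height / 2;
  return [
    { name: "back", position: [0, y, -depth / 2], rotationY: 0 },
    { name: "front", position: [0, y, depth / 2], rotationY: Math.PI },
    { name: "left", position: [-width / 2, y, 0], rotationY: Math.PI / 2 },
    { name: "right", position: [width / 2, y, 0], rotationY: -Math.PI / 2 },
  ];
}

// Normal of a +z-facing plane after rotating it by rotationY about the y axis.
function wallNormal(rotationY: number): [number, number, number] {
  return [Math.sin(rotationY), 0, Math.cos(rotationY)];
}
```

A quick sanity check is that each wall's normal points back toward the room's center, so its dot product with the wall's horizontal position is negative.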

Symmetric point lights (with emissive sphere polygons) have been positioned in the room's corners to better illustrate the effect of multidirectional light on the texture mapping.

Artworks

Each 'artwork' is an image texture-mapped onto a rectangular polygon. It is UV-mapped using a GL.NEAREST filter strategy and scaled in proportion to its original dimensions while still fitting on the wall. Because each rectangle is mathematically similar to the original image's dimensions, there is no effective distortion between the 3D images and the artist's original images. Because canvas isn't perfectly smooth, artworks are bump-mapped using a canvas-like image loaded off-frame. Images also have a slightly increased specular factor, which adds glossiness to simulate dried oil paint.
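The proportional sizing described above amounts to a uniform scale that keeps the aspect ratio. A minimal sketch follows, where `Size` and `fitToWall` are hypothetical names and the wall is treated as a simple width-by-height area in scene units:

```typescript
interface Size {
  width: number;
  height: number;
}

// Scale the image uniformly so it fits inside the wall area while
// keeping its original aspect ratio (the result is a similar rectangle).
function fitToWall(image: Size, wall: Size): Size {
  if (image.width <= 0 || image.height <= 0) {
    throw new Error("image dimensions must be positive");
  }
  const scale = Math.min(wall.width / image.width, wall.height / image.height);
  return { width: image.width * scale, height: image.height * scale };
}
```

Taking the smaller of the two ratios guarantees that neither dimension overflows the wall; a landscape image is limited by the wall's width and a portrait image by its height.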

Sky and additional lighting

Lighting, skybox, camera angles

Sky simulation was accomplished using a skybox technique, in which each side of a very large cube polygon is UV-mapped with a specific image and the cube is centered with respect to the scene. With backface culling applied, the resulting effect is a sort of "shoebox" environment where the viewer can rotate and see only the inside faces of the cube. As the images themselves are of a city at sunset, slight ambient lighting is applied to account for the lighting effects of the sky.
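To make the "shoebox" idea concrete, the sketch below picks which cube face a viewer at the cube's center is looking at, by finding the dominant axis of the view direction. The face labels (`px`, `nx`, and so on) follow a common cube-map naming habit and are an assumption here, as is the function name `skyboxFace`; this is not code from the app.

```typescript
type Face = "px" | "nx" | "py" | "ny" | "pz" | "nz";

// For a viewer at the cube's center, the face hit by a view direction is
// the one on the axis with the largest absolute component.
function skyboxFace(dir: [number, number, number]): Face {
  const [x, y, z] = dir;
  const ax = Math.abs(x);
  const ay = Math.abs(y);
  const az = Math.abs(z);
  if (ax === 0 && ay === 0 && az === 0) {
    throw new Error("direction must be non-zero");
  }
  if (ax >= ay && ax >= az) return x > 0 ? "px" : "nx";
  if (ay >= az) return y > 0 ? "py" : "ny";
  return z > 0 ? "pz" : "nz";
}
```

Looking straight down the negative z axis, for example, selects the `nz` face, while looking mostly upward selects `py`.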

Although ambient lighting is used to illuminate the scene slightly, support lighting is needed to properly illuminate the artworks. This is accomplished using simple three.js spotlight constructs. Each spotlight is pure white with an angle of 45 degrees. One spotlight, positioned at the origin and facing an artwork, is automatically added for each picture that enters the scene.
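The geometry of one such spotlight can be sketched without three.js. The code below assumes the 45 degrees is measured from the beam's axis to the edge of the cone (which is how three.js defines `SpotLight.angle`); if the report instead means the full cone width, the radius would be smaller. The names `aimDirection` and `footprintRadius` are hypothetical.

```typescript
type Point3 = [number, number, number];

// Unit vector pointing from the light's position toward the artwork.
function aimDirection(from: Point3, target: Point3): Point3 {
  const d: Point3 = [target[0] - from[0], target[1] - from[1], target[2] - from[2]];
  const len = Math.sqrt(d[0] * d[0] + d[1] * d[1] + d[2] * d[2]);
  if (len === 0) throw new Error("light and target coincide");
  return [d[0] / len, d[1] / len, d[2] / len];
}

// Radius of the lit circle on a wall perpendicular to the beam.
// Assumption: halfAngleDeg is measured from the beam axis to the cone edge.
function footprintRadius(distance: number, halfAngleDeg: number): number {
  return distance * Math.tan((halfAngleDeg * Math.PI) / 180);
}
```

Under that assumption, a 45-degree spotlight lights a circle whose radius equals its distance from the wall, which is generous enough to cover an artwork from the room's center.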

Camera angles

Custom camera angles were implemented using a native JavaScript event listener on the canvas element, whose handler calls a camera-moving function. Camera viewing coordinates are adjusted by specifying an up vector, a lookFrom vector, and a lookAt vector.
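The three vectors determine an orthonormal camera basis. The sketch below shows one standard way to derive it, using the right-handed, OpenGL-style convention in which the camera looks along its negative z axis. The helper names and the returned object shape are assumptions for illustration, not the app's camera-moving function.

```typescript
type Vec3 = [number, number, number];

const sub = (a: Vec3, b: Vec3): Vec3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];

const cross = (a: Vec3, b: Vec3): Vec3 => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];

const normalize = (v: Vec3): Vec3 => {
  const len = Math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2]);
  if (len === 0) throw new Error("cannot normalize a zero vector");
  return [v[0] / len, v[1] / len, v[2] / len];
};

// Right-handed camera basis; the camera looks along -backward.
function cameraBasis(lookFrom: Vec3, lookAt: Vec3, up: Vec3) {
  const backward = normalize(sub(lookFrom, lookAt)); // camera +z
  const right = normalize(cross(up, backward));      // camera +x
  const trueUp = cross(backward, right);             // camera +y, already unit length
  return { right, up: trueUp, backward };
}
```

The supplied up vector only needs to be roughly upward; it is re-orthogonalized into `trueUp`. The sketch throws if the up vector is parallel to the viewing direction, since no unique basis exists in that case.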