The projects I participated in

Data Augmentation for Rare Traffic Signs to Boost the Performance of Detection

When training a traffic sign detection model, photos of some categories are extremely hard to collect, so we needed another way to obtain enough data to train a robust and accurate model. We initiated research on applying style transfer to traffic object generation, that is, producing scarce traffic sign categories in photos of real traffic scenes.

Because of policy, no details or code can be shared publicly.

- Initiated research on style transfer to generate rare traffic signs in real traffic scenes, providing a more balanced dataset for the subsequent traffic sign detection task

- Analyzed the distribution of traffic signs from 6 categories in 500k images of real traffic scenes; created synthesized images by replacing existing signs with rare traffic signs to balance the classes in each category

- Implemented WCT (whitening and coloring transform) and a local smoothing algorithm in PyTorch to transfer style from the context to the traffic sign objects in the synthesized images

- Achieved a 94% recall rate in traffic sign detection using the augmented balanced images (versus 35% with the original imbalanced dataset and 98% with a real balanced dataset, all at 0.1 FPPI)
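
The recall figures above are reported at a fixed false-positives-per-image (FPPI) budget. A minimal sketch of how such a number can be computed, assuming detections have already been matched against ground truth; the function name and the input layout are illustrative and not taken from the project:

```python
def recall_at_fppi(detections, num_ground_truth, num_images, max_fppi):
    """Return the best recall reachable without exceeding max_fppi.

    detections: list of (confidence, is_true_positive) pairs pooled over
    the whole test set (hypothetical input layout).
    """
    # Sweep the confidence threshold from strict to loose.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    true_pos = 0
    false_pos = 0
    recall = 0.0
    for _, is_true_positive in ranked:
        if is_true_positive:
            true_pos += 1
        else:
            false_pos += 1
        # Stop as soon as the false-positive budget is exceeded.
        if false_pos / num_images > max_fppi:
            break
        recall = true_pos / num_ground_truth
    return recall


example_detections = [(0.9, True), (0.8, True), (0.7, False), (0.6, True), (0.5, False)]
example_recall = recall_at_fppi(
    example_detections, num_ground_truth=4, num_images=10, max_fppi=0.1
)
```

Comparing models at the same FPPI keeps the recall numbers comparable, because each model is allowed the same false-alarm rate.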

NVIDIA AICity Challenge 2019 (CVPRW 2019)

In this challenge, I collaborated with students and professors from three different universities. Our algorithms ranked 8th, 13th, and 3rd on the three tracks respectively, among dozens of teams from academia and industry. During the competition, I held weekly meetings, handled the track-3 work, and contributed to the other two tracks.

- Collaborated with professors and students from three universities; held and participated in weekly meetings; independently accomplished the track-3 task and contributed to track-1 and track-2

- Polished the framework I proposed in last year's Challenge for detection and tracking

- Trained an FPN-R-FCN vehicle detection model on more vehicle data and improved the classification model

- Replaced the simple IoU strategy with the DaSiamRPN single-object tracking algorithm to obtain more accurate timestamps when backtracking in the original video

- Achieved a competitive result of 0.7027 F1-score and 7.4679 RMSE on a much harder dataset compared with last year's challenge
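
For context, the kind of simple IoU association that the tracker replaced can be sketched as follows. This is an illustrative baseline under assumed conventions (boxes as `(x1, y1, x2, y2)`, a hypothetical `match_by_iou` helper and threshold), not the code used in the challenge:

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_by_iou(target, candidates, threshold=0.5):
    """Return the index of the candidate overlapping target the most,
    or None if no candidate reaches the threshold."""
    best_index, best_score = None, threshold
    for i, candidate in enumerate(candidates):
        score = iou(target, candidate)
        if score >= best_score:
            best_index, best_score = i, score
    return best_index
```

Matching purely on overlap fails when a box shifts a lot between frames or when detections are missed, which is where a dedicated single-object tracker helps.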

Advanced Driving Assistance System (ADAS)

This is a project I participated in during my internship at SenseTime. Our ADAS system is used by Honda for self-driving. My job was to improve the performance of the detection models on traffic objects, including traffic lights (four statuses), traffic signs (20 categories), and PVB (pedestrian, vehicle, bike). In addition, because of limited computation resources, I fused all the models into one by sharing convolution layers while maintaining their performance.

This project cannot be put on Git because of policy.

- Devised a multi-task architecture in Caffe to accomplish three traffic object detection tasks simultaneously, namely PVB (Pedestrian, Vehicle and Bike) detection, traffic light detection and traffic sign detection

- Shared a backbone across three detectors, each consisting of an RPN and Fast R-CNN; the backbone was updated with normalized gradients coming from the different detectors

- Exploited mimic learning to refine detectors towards the performance of individually trained networks

- Met Honda's requirements with significantly increased training and inference speed as well as reduced GPU memory usage
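
The exact normalization scheme is not described here. One possible reading, shown purely as an assumption, is that each detector's gradient on the shared backbone is rescaled to unit L2 norm before the gradients are summed, so that no single task dominates the update. The helper names below are hypothetical:

```python
import math


def l2_norm(vector):
    """Euclidean norm of a flat list of gradient values."""
    return math.sqrt(sum(v * v for v in vector))


def combine_normalized_gradients(task_gradients, eps=1e-12):
    """Sum per-task gradients after scaling each to unit L2 norm.

    task_gradients: one flat gradient list per detector, all of the same
    length (an assumed layout for the shared-backbone parameters).
    """
    combined = [0.0] * len(task_gradients[0])
    for gradient in task_gradients:
        scale = 1.0 / (l2_norm(gradient) + eps)  # eps guards against zero gradients
        for i, value in enumerate(gradient):
            combined[i] += value * scale
    return combined


# Two tasks with very different gradient magnitudes contribute equally.
shared_update = combine_normalized_gradients([[3.0, 4.0], [0.0, 100.0]])
```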

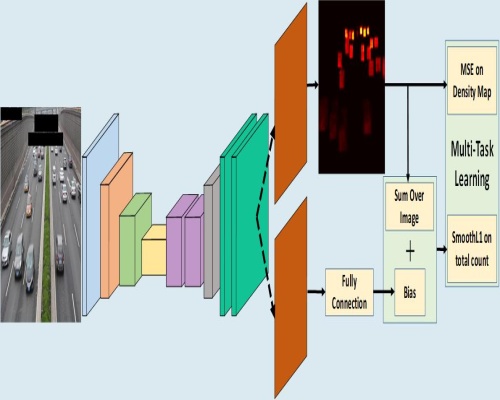

Understanding Vehicle Density in Traffic Surveillance

For understanding traffic, the number of vehicles on the road is a key indicator of congestion. However, detection models struggle in hard cases such as traffic jams or bad weather. Borrowing from crowd density estimation methods, which work under extreme congestion, I designed an FCN with an Inception-v3 backbone to predict a density map. To obtain an accurate estimate, I trained with two losses, one on the density map and one on the bias, where the bias is the difference between the ground-truth total count and the count obtained by summing the predicted density map. The resulting model outperforms the detection-based method and runs in real time.

View more on GitHub.

- Used a density prediction model with better robustness for traffic jam and low-quality video to obtain the total number of vehicles in real-time

- Designed an FCN with Inception-v3 as the backbone to predict the density map

- Trained the model with two losses that constrain the density map and the total count respectively

- Achieved 94.2% accuracy at a speed of 20 fps and outperformed the detection-based model
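
The two training losses can be sketched in plain Python as below. The mean squared error on the map, the absolute value on the count bias, and the `count_weight` balancing factor are all assumptions made for illustration; the project's actual loss forms and weights are not stated here.

```python
def density_losses(pred_map, gt_map, count_weight=0.01):
    """Return (map_loss, count_bias, total_loss) for one image.

    pred_map, gt_map: 2D lists of per-pixel vehicle density.
    count_weight: hypothetical factor balancing the two terms.
    """
    num_pixels = sum(len(row) for row in gt_map)
    squared_error = 0.0
    for pred_row, gt_row in zip(pred_map, gt_map):
        for p, g in zip(pred_row, gt_row):
            squared_error += (p - g) ** 2
    map_loss = squared_error / num_pixels

    # Summing a density map gives the estimated number of vehicles.
    pred_count = sum(sum(row) for row in pred_map)
    gt_count = sum(sum(row) for row in gt_map)
    count_bias = abs(gt_count - pred_count)

    return map_loss, count_bias, map_loss + count_weight * count_bias


ground_truth = [[1.0, 0.0], [0.0, 0.0]]
blurred_prediction = [[0.5, 0.5], [0.0, 0.0]]  # right count, imperfect map
empty_prediction = [[0.0, 0.0], [0.0, 0.0]]    # wrong count
```

The count term penalizes predictions whose total is off even when the per-pixel error is small, which is the quantity that matters for congestion estimation.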

Unsupervised Anomaly Detection for Traffic Surveillance (CVPRW 2018)

This work is for NVIDIA AI CITY CHALLENGE 2018 track-2, traffic anomaly detection in surveillance video. We identified a property common to all anomalies: whenever an anomaly happens, it leads to at least one stopped vehicle, which becomes part of the video background. Based on this finding, we designed a framework, and our algorithm ranked 2nd in the final competition.

View more on GitHub.

You can also find the demo video here, and the paper is here.

- Designed a novel unsupervised system to detect abnormal vehicles in traffic surveillance videos that could adapt to various scenes without special treatment

- Used MOG2 to extract background frames and Faster R-CNN to detect vehicles in them, since abnormal vehicles stay in the video background for a long time; trained a VGG classifier to eliminate falsely detected bounding boxes

- Trained a ReID model with a ResNet backbone and triplet loss to compare similarity in cases of camera movement or vehicles waiting at traffic lights

- Designed a decision model to determine whether a detected vehicle is an anomaly according to the bounding boxes obtained above

- Achieved 0.81 F1-score and 10.2 RMSE on the traffic anomaly detection dataset of the NVIDIA AI CITY Challenge
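
The key observation, that a stopped vehicle is absorbed into the background while moving vehicles are not, can be illustrated with a much simpler background model than MOG2. The sketch below uses an exponential running average on a one-dimensional toy "frame"; it is a stand-in for intuition only, and the function names, `alpha`, and `threshold` are hypothetical:

```python
def update_background(background, frame, alpha=0.1):
    """Blend the new frame into the running-average background."""
    return [(1.0 - alpha) * b + alpha * f for b, f in zip(background, frame)]


def stationary_pixels(frames, alpha=0.1, threshold=0.5):
    """Return indices of pixels that end up as bright background."""
    background = [0.0] * len(frames[0])
    for frame in frames:
        background = update_background(background, frame, alpha)
    return [i for i, value in enumerate(background) if value > threshold]


# Toy sequence: pixel 2 holds a stopped vehicle in every frame,
# pixel 5 is covered by a passing vehicle for only three frames.
toy_frames = []
for t in range(50):
    frame = [0.0] * 8
    frame[2] = 1.0
    if 10 <= t <= 12:
        frame[5] = 1.0
    toy_frames.append(frame)

absorbed = stationary_pixels(toy_frames)
```

Running a detector on the background image rather than on raw frames therefore surfaces only the vehicles that have stayed in place.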

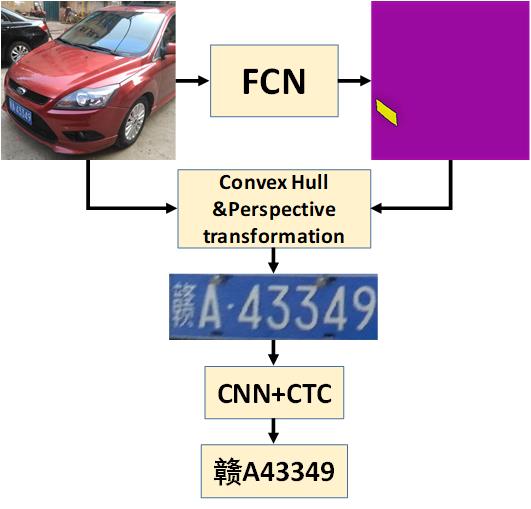

License Plates Recognition Based on Segmentation and Multi-Label Classification

Almost all existing license plate recognition algorithms work only in homogeneous scenes. I therefore built a system that recognizes Chinese license plates in multi-oriented images and handles a wide range of scenes in real time with strong robustness. It runs in real time in real-world conditions without any scene-specific modification.

View more on GitHub.

Contact me if you want to view more or get my data.

- Built a license plate recognition system to detect and recognize multi-oriented Chinese license plates in various scenes in real time with strong robustness

- Trained a segmentation model to perform pixel-wise classification and obtain the pure license plate area on in-the-wild vehicle images, avoiding the background interference caused by using detection to locate the license plate

- Obtained the quadrilateral envelope from the convex hull of the license plate area, and then transformed the quadrilateral into a rectangle through perspective transformation

- Designed a CNN with CTC loss to recognize license plate characters end-to-end on rectangular license plate images; trained the classification model on 100k simulated images and fine-tuned on real data due to the lack of annotation

- Achieved 98.1% recognition accuracy from vehicle image to vehicle ID at a speed of 50 fps
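
A network trained with CTC loss outputs one score vector per horizontal position, and those per-frame outputs must be collapsed into a character string. A minimal sketch of greedy CTC decoding follows, assuming that greedy decoding is used at inference and that index 0 is the blank label; the tiny alphabet is made up for the example:

```python
def ctc_greedy_decode(frame_scores, alphabet, blank=0):
    """Collapse per-frame scores into a string.

    frame_scores: list of score rows, one row per time step.
    alphabet: list mapping class index to character (index `blank` unused).
    """
    # Pick the most likely class at every time step.
    best_path = [max(range(len(row)), key=row.__getitem__) for row in frame_scores]

    decoded = []
    previous = blank
    for index in best_path:
        # Drop blanks and merge consecutive repeats of the same class.
        if index != blank and index != previous:
            decoded.append(alphabet[index])
        previous = index
    return "".join(decoded)


toy_alphabet = ["-", "A", "1", "2"]  # "-" stands for the CTC blank
toy_scores = [
    [0.1, 0.7, 0.1, 0.1],    # A
    [0.2, 0.6, 0.1, 0.1],    # A (repeat, merged)
    [0.9, 0.0, 0.05, 0.05],  # blank
    [0.1, 0.1, 0.7, 0.1],    # 1
    [0.1, 0.1, 0.6, 0.2],    # 1 (repeat, merged)
    [0.8, 0.1, 0.05, 0.05],  # blank separates the next 1
    [0.1, 0.0, 0.8, 0.1],    # 1
    [0.1, 0.0, 0.1, 0.8],    # 2
]
plate_text = ctc_greedy_decode(toy_scores, toy_alphabet)
```

The blank label is what lets the model emit the same character twice in a row, which is common on license plates.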

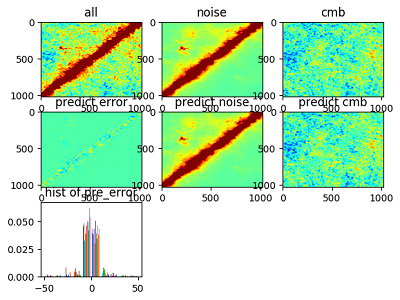

Denoising for Cosmic Microwave Background (CMB) Signal with Auto-Encoders

In this project, my goal is to remove stubborn noise from the data, which is called the 'all' signal. Using a residual connection, the first autoencoder takes the 'all' signal as input and outputs the 'noise' signal. The difference between the 'all' signal and the 'noise' signal is then the input to the next autoencoder. This stack of autoencoders produced strong results.

View more on GitHub.

- Designed an Auto-Encoder Network using Residual Connection and Dense Connection which could efficiently reduce the background noise

- Trained two models with the same architecture, using MSE and MAE losses, to learn the noise from the original images and to learn the CMB signal from the noise-free images respectively; connected the two models using a residual connection

- Achieved around 450 MSE and 8 MAE on the evaluation dataset and a decent PSD compared with traditional methods
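
The data flow between the two autoencoders can be sketched as below. The two models are passed in as plain callables, and the stand-in lambdas in the example are placeholders for trained networks, not the project's models:

```python
def stacked_denoise(all_signal, noise_model, signal_model):
    """Wire two models together with a residual connection.

    noise_model: maps the raw 'all' signal to an estimate of its noise.
    signal_model: maps the noise-subtracted signal to the final output.
    """
    estimated_noise = noise_model(all_signal)
    # Residual connection: subtract the predicted noise from the input.
    residual = [a - n for a, n in zip(all_signal, estimated_noise)]
    return signal_model(residual)


# Placeholder models: a constant noise estimate and an identity refiner.
constant_noise = lambda signal: [1.0 for _ in signal]
identity = lambda signal: list(signal)

cleaned = stacked_denoise([3.0, 4.0], constant_noise, identity)
```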

Traffic Signs Detection

This is a project about detecting traffic signs on the highway. Balancing accuracy and speed, I chose Faster R-CNN for the task.

View more on GitHub.

Contact me if you want to view more or get my data.

- Designed a traffic sign detection system with high accuracy and fast speed

- Annotated 10k+ bounding boxes on 5k+ images captured in various scenes

- Trained a Faster R-CNN detection model on 80% of the labeled data and achieved 97.4% accuracy on the test dataset

- Deployed the traffic sign detection system on Windows using Qt and hosted the detection model on a Linux server

Lane Detection for Moving Vehicles

This is a project I completed during my internship at Samsung China. Because the program runs on mobile devices, runtime and size are equally important. The main idea is to find candidate lines that could be lanes and then track how the weights over the lane locations change. From this, we can determine the current lane or whether the car is changing lanes.

View more on GitHub.

- Designed an algorithm for detecting lanes through an in-vehicle camera in real time with high accuracy

- Implemented the algorithm in C++

- Optimized the algorithm's performance and reduced detection time with more efficient data structures and cleaner logic

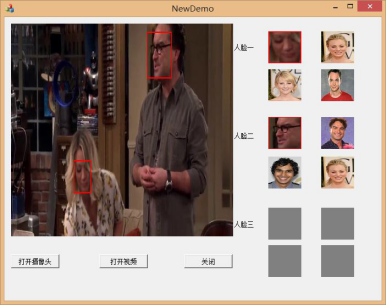

Video Face Recognition based on Deep Learning

This project was my first contact with deep learning. As it progressed, I became familiar with the details of deep learning, although I spent a lot of the time on environment setup and debugging. Seeing my face recognition model run was a big motivation.

Contact me if you want to view more. The project is too large to put on Git because of the MFC components.

- Completed a demo that can show the identified face appearing in the demo video

- Designed a convolutional neural network for face recognition

- Trained the CNN and tuned its parameters in Caffe on the CASIA-WebFace database

- Gained a deeper understanding of the roles of the different layers of a CNN

- Achieved 96% accuracy on face recognition, and the demo performed well

Handwritten Fuzzy Numeric Characters Recognition

A simple but important project for me, which prompted me to explore deep learning and the broader machine learning area. I came up with a way to classify the numbers in pictures: divide every picture into parts, each of which is fitted with a Gaussian distribution. The EM method suits this well.

View more on GitHub.

- Built a model that can recognize fuzzy numbers in pictures

- Selected the EM algorithm to complete the recognition work considering the features of numeric characters

- Implemented the design in C++

- Achieved 93% average accuracy across the different character images
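
The project itself was written in C++; the following Python sketch only illustrates the EM idea on the simplest possible case, a one-dimensional mixture of Gaussians. The function names, the initial values, and the toy data are assumptions for the example, not the project's feature representation:

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a 1D normal distribution."""
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def em_gmm_1d(data, means, variances, weights, iterations=50, min_var=1e-6):
    """Fit a 1D Gaussian mixture with EM, starting from the given guesses."""
    means, variances, weights = list(means), list(variances), list(weights)
    k = len(means)
    for _ in range(iterations):
        # E-step: responsibility of each component for each point.
        responsibilities = []
        for x in data:
            scores = [weights[j] * gaussian_pdf(x, means[j], variances[j]) for j in range(k)]
            total = sum(scores)
            if total == 0.0:
                responsibilities.append([1.0 / k] * k)
            else:
                responsibilities.append([s / total for s in scores])
        # M-step: re-estimate each component from its weighted points.
        for j in range(k):
            mass = sum(r[j] for r in responsibilities)
            if mass == 0.0:
                continue
            means[j] = sum(r[j] * x for r, x in zip(responsibilities, data)) / mass
            spread = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(responsibilities, data)) / mass
            variances[j] = max(spread, min_var)  # floor avoids a collapsed component
            weights[j] = mass / len(data)
    return means, variances, weights


toy_data = [-0.5, 0.0, 0.5, 9.5, 10.0, 10.5]
fitted_means, fitted_vars, fitted_weights = em_gmm_1d(
    toy_data, means=[1.0, 8.0], variances=[1.0, 1.0], weights=[0.5, 0.5]
)
```

On this toy data the two fitted means settle near the two cluster centers, and each component ends up with about half of the total weight.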